Legacy system migration has become one of the most deceptively complex responsibilities sitting on a CTO’s desk in 2025. Everyone talks about “digital transformation” as if migrating decades-old on-prem data into a modern cloud stack were simply a matter of running scripts, executing ETL jobs, and flipping a switch. Ignore the hype around “seamless cloud adoption.” The reality is far messier, far grittier, and ultimately far more political than technology leaders care to admit.

Migrating legacy data is not a technical project. It is an organizational event. It reshapes architecture, workflows, governance, compliance, vendor relationships, and risk models. One wrong move — a corrupted dataset, a misaligned schema, a permissions oversight, a missed dependency — can stall the entire business. And while cloud-native companies get to enjoy clean architectures and fresh data models, enterprises with 10, 20, or even 40 years of operational history must carefully disentangle layers of legacy logic baked deep into the system.

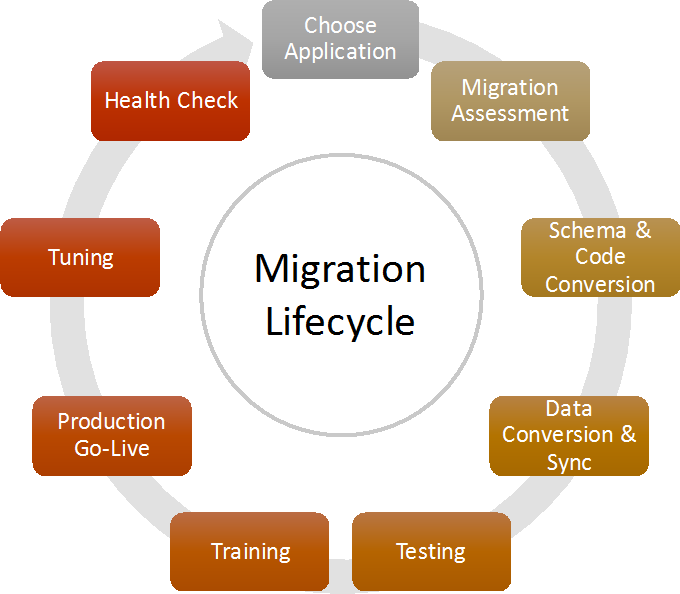

To anchor the conversation visually:

The Shift: Why Legacy Migrations Are Suddenly Mission-Critical

A decade ago, migrations were seen as “nice-to-have modernization projects.” In 2025, they’re survival-level infrastructure upgrades. The reasons are painfully obvious to CTOs:

- On-prem hardware is reaching end-of-life.

- Security requirements have escalated beyond what legacy environments can handle.

- AI and automation depend on accessible, clean, cloud-based data.

- Compliance frameworks demand better auditability than legacy databases can provide.

- Cross-team collaboration is impossible when data lives inside isolated, dying systems.

Picture a mid-sized enterprise still running a 2008 Oracle instance on old servers under someone’s desk, with dozens of undocumented stored procedures executing business-critical logic. Now picture executives demanding real-time reporting, machine learning, and integration with cloud services. This isn’t modernization — it’s an architectural rescue mission.

The Harsh Reality: Legacy Systems Are Never Just “Databases”

One of the biggest misconceptions I see is the belief that legacy systems contain “old data.” No. They contain old logic. Old decisions. Old assumptions. Old naming conventions. Old schema structures tied to long-gone business rules. And all of it must be understood, mapped, preserved, or intentionally discarded.

Data migration fails not because of bad cloud tools but because teams underestimate how much institutional memory is encoded in the legacy structure. You’re not just moving data — you’re decoding the past.

A CTO must therefore take a stance early: Will the organization replicate legacy behavior or rewrite it? Both paths are painful, but only one moves the company forward.

The Danger of “Lift and Shift” Thinking

“Lift and shift” sounds efficient until you realize you’ve simply dragged every historical flaw into a modern environment. Cloud infrastructure amplifies patterns. It doesn’t magically correct architectural debt.

Here’s a quick comparison illustrating why many migrations fail:

| Migration Approach | 🚀 Short-Term Benefit | ⚠️ Long-Term Risk |
|---|---|---|
| Lift-and-Shift | Fastest implementation | Reinforces legacy constraints in cloud |
| Partial Refactor | Reduces some complexity | Creates hybrid chaos if inconsistent |
| Full Re-architecture | Clean future-proof design | Higher upfront cost + organizational resistance |

The temptation is always to prioritize speed. The consequence is always the same: you pay for it later, in complexity.

Why Migrations Break: The Unspoken Variables

Every CTO knows data types, schemas, and pipelines matter. But migrations rarely break because of technical complexity alone. They break because of organizational blind spots:

- Missing domain experts who understand legacy rules

- Terrible documentation (or none at all)

- Undiscovered dependencies between systems

- Permission gaps

- Compliance models not mapped to the new infrastructure

- Political fights over who “owns” the data

- Stakeholders expecting zero downtime

- Teams believing cloud equals simplicity

Legacy migration turns into a minefield when no one fully understands how the system actually behaves under load or in edge cases.

To be frank, the most dangerous phrase uttered during a migration is:

“I think this is the last place that field is used.”
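One way to replace that guess with evidence is to scan the text of every stored procedure, view, and job for the field name. The sketch below assumes the legacy code has already been dumped into a dictionary of name to source text; `find_field_references`, the procedure names, and `cust_flag` are all hypothetical, and a plain text search will still miss dynamically built SQL.

```python
import re
from typing import Dict, List


def find_field_references(field: str, sources: Dict[str, str]) -> List[str]:
    """Return the names of code objects whose text mentions `field` as a whole word."""
    # \b keeps "cust_flag" from matching "cust_flag_old"; legacy SQL is
    # usually case-insensitive, so the search is too.
    pattern = re.compile(rf"\b{re.escape(field)}\b", re.IGNORECASE)
    return sorted(name for name, text in sources.items() if pattern.search(text))


# Hypothetical stored-procedure bodies exported from the legacy database.
legacy_sources = {
    "sp_close_invoice": "UPDATE invoices SET cust_flag = 'C' WHERE id = @id",
    "sp_nightly_report": "SELECT id, CUST_FLAG FROM invoices",
    "sp_archive_orders": "DELETE FROM orders WHERE created < @cutoff",
}

references = find_field_references("cust_flag", legacy_sources)
print(references)  # ['sp_close_invoice', 'sp_nightly_report']
```

A scan like this does not prove a field is unused, but it turns "I think" into a list that a domain expert can review.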

Mapping the Journey: From On-Prem Chaos to Cloud Architecture

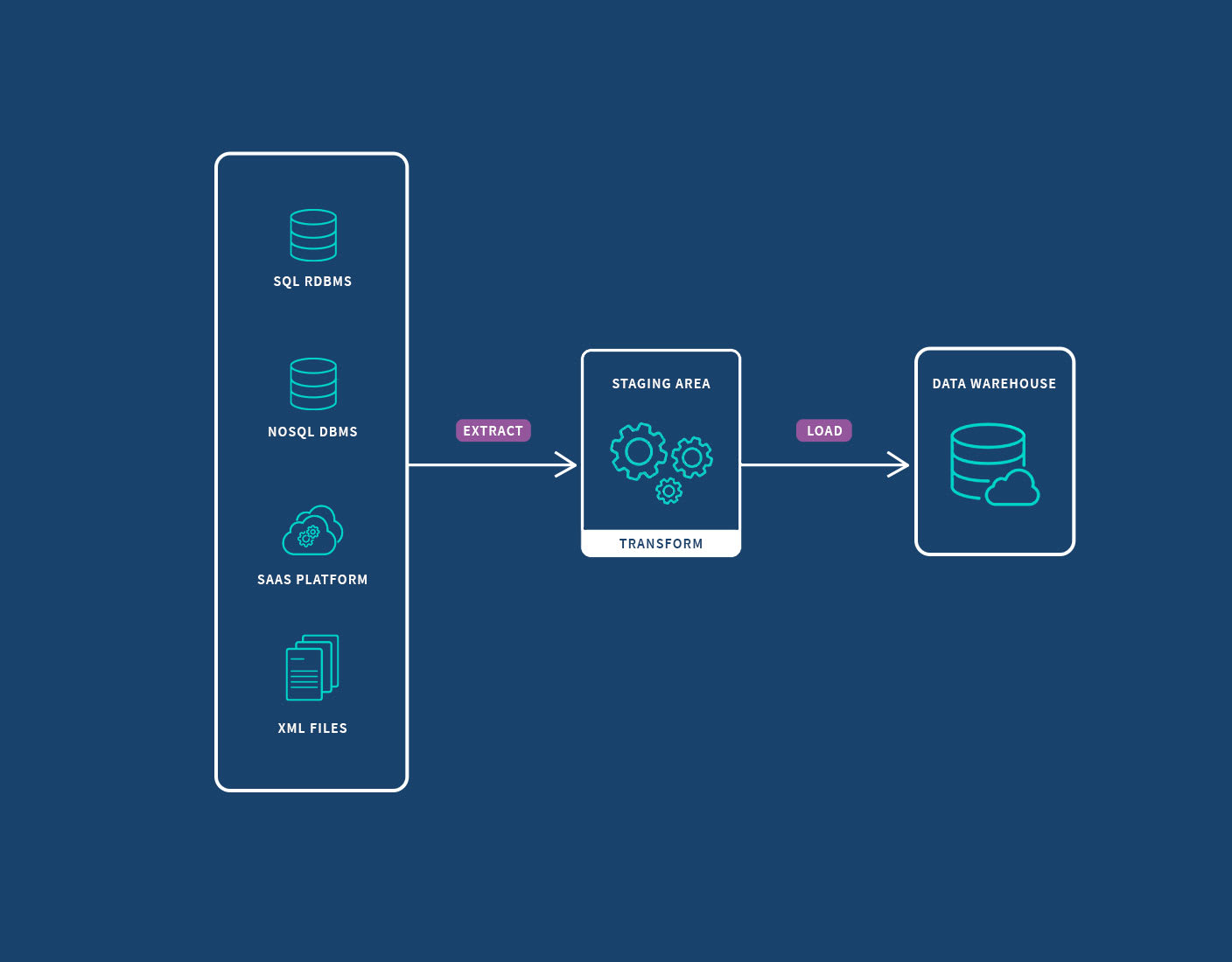

Let’s visualize the migration lifecycle, including the stages even seasoned leaders sometimes forget:

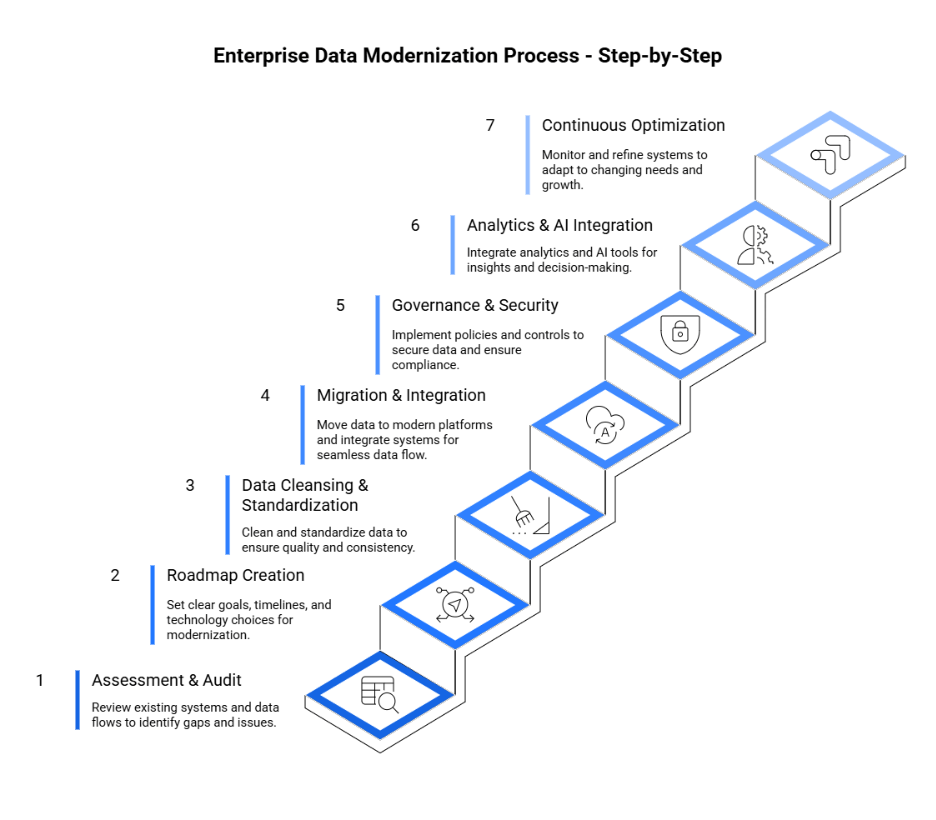

The migration journey always follows this arc:

- Assessment: What exists, what’s broken, what’s undocumented, what must survive.

- Extraction: Pulling data out without corrupting or altering business logic.

- Transformation: Cleaning, normalizing, refactoring, revalidating.

- Validation: Ensuring nothing mission-critical got lost in translation.

- Deployment: Moving to the cloud with correct governance and access layers.

- Monitoring: Ensuring the new environment behaves predictably.

Each step requires different teams, different tools, and different mindsets. You can’t brute-force a migration; you orchestrate it.
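The validation stage is the easiest one to make concrete. A minimal sketch, assuming both sides can be queried with SQL: compare a row count and a content hash per table, ordered by a stable key. In-memory SQLite databases stand in for the legacy source and the cloud target here, and `table_fingerprint` and the `invoices` table are illustrative names, not part of any particular tool.

```python
import hashlib
import sqlite3
from typing import List, Tuple


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> Tuple[int, str]:
    """Return (row_count, sha256 hex digest) of a table read in key order."""
    # Identifiers are interpolated into the SQL, so `table` and `key` must
    # come from a trusted list, never from user input.
    rows = conn.execute(f'SELECT * FROM "{table}" ORDER BY "{key}"').fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()


def make_db(rows: List[Tuple[int, str]]) -> sqlite3.Connection:
    """Build an in-memory stand-in for a source or target database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE invoices (id INTEGER PRIMARY KEY, amount TEXT)")
    conn.executemany("INSERT INTO invoices VALUES (?, ?)", rows)
    return conn


source = make_db([(1, "10.00"), (2, "25.50")])
target_ok = make_db([(2, "25.50"), (1, "10.00")])   # same rows, different load order
target_bad = make_db([(1, "10.00"), (2, "25.5")])   # a value silently reformatted

matches = table_fingerprint(source, "invoices", "id") == table_fingerprint(target_ok, "invoices", "id")
drifted = table_fingerprint(source, "invoices", "id") != table_fingerprint(target_bad, "invoices", "id")
print(matches, drifted)
```

Matching fingerprints only show that the bytes survived. Whether the business logic survived still needs people who know the legacy rules.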

The Most Underrated Component: Governance Before Migration

Most organizations start migrations with data extraction. That’s a mistake. They should start with governance. Without governance, your cloud stack becomes a polished version of your legacy mess.

Governance includes:

- Data ownership

- Access control

- Compliance mapping

- Retention and archival strategy

- Audit trails

- Monitoring

- Naming conventions

- API and schema versioning rules

This is precisely where modern cloud infrastructure excels — not in storage or compute but in control.
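Governance becomes enforceable once it is written down as data rather than as a slide. As a rough sketch, assuming each dataset is described by a small metadata record, a pre-migration check can flag anything without an owner, a retention period, or a classification, along with names that break the agreed convention. The field names, the snake_case rule, and `governance_gaps` are assumptions chosen for illustration.

```python
import re
from typing import Any, Dict, List

# Assumed minimum metadata every dataset needs before it may be migrated.
REQUIRED_FIELDS = ("owner", "retention_days", "classification")
# Assumed naming convention: lowercase snake_case starting with a letter.
NAME_PATTERN = re.compile(r"^[a-z][a-z0-9_]*$")


def governance_gaps(datasets: Dict[str, Dict[str, Any]]) -> Dict[str, List[str]]:
    """Map each non-compliant dataset name to a list of its governance problems."""
    gaps: Dict[str, List[str]] = {}
    for name, meta in datasets.items():
        problems = [f"missing {field}" for field in REQUIRED_FIELDS
                    if meta.get(field) in (None, "")]
        if not NAME_PATTERN.match(name):
            problems.append("name violates convention")
        if problems:
            gaps[name] = problems
    return gaps


# Hypothetical inventory entries.
datasets = {
    "customer_orders": {"owner": "sales-ops", "retention_days": 2555, "classification": "internal"},
    "TMP_Export2": {"owner": "", "retention_days": 30, "classification": "internal"},
}

gaps = governance_gaps(datasets)
print(gaps)
```

Running a check like this before extraction starts forces the ownership conversation early, while it is still cheap.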

One of the first questions I ask CTOs is:

“If a regulator asked for a complete access audit of your legacy data, could you produce it?”

The answer is almost always no — which tells you everything about the urgency of migrating.

Designing a Cloud Architecture That Doesn’t Break Under Real Use

Cloud architecture isn’t just lifting data into S3 or BigQuery. It’s rethinking:

- Ingestion pipelines

- Data modeling

- Streaming vs batch logic

- ETL/ELT design

- API structures

- Idempotency of operations

- Failure recovery paths

- Logging granularity

A migration is your one chance to fix the mistakes of the past. Many organizations waste that opportunity by over-relying on tools instead of design.
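Idempotency is the item on that list most often skipped, so here is a small sketch of what it means in practice: a load step that can be retried after a failure without creating duplicates. It uses SQLite's upsert syntax (`ON CONFLICT ... DO UPDATE`, available in SQLite 3.24 and later) as a stand-in for whatever merge or upsert your target platform offers. The `customers` table and `load_batch` are hypothetical.

```python
import sqlite3
from typing import Iterable, Tuple


def load_batch(conn: sqlite3.Connection, batch: Iterable[Tuple[int, str]]) -> None:
    """Insert or update rows keyed on id, so re-running a batch is harmless."""
    conn.executemany(
        "INSERT INTO customers (id, email) VALUES (?, ?) "
        "ON CONFLICT(id) DO UPDATE SET email = excluded.email",
        batch,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")

batch = [(1, "a@example.com"), (2, "b@example.com")]
load_batch(conn, batch)
load_batch(conn, batch)  # simulated retry after a pipeline failure

rows = conn.execute("SELECT id, email FROM customers ORDER BY id").fetchall()
print(rows)
```

With a plain `INSERT`, the retry would either fail on the primary key or, without a key, quietly double the data. Keying the write on a stable identifier is what makes failure recovery safe.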

Here’s an architectural comparison that often clarifies direction:

| Architecture Style | ☁️ Cloud Strength | ⚠️ Migration Risk |
|---|---|---|
| Data Lake | Flexible, scalable, cost-efficient | Becomes a “data swamp” without governance |
| Data Warehouse | Strong consistency + analytics | Requires heavy modeling upfront |
| Lakehouse | Hybrid flexibility + governance | Still new, tooling varies in maturity |

Choosing the wrong architecture creates decades of regret. No pressure.

The Hardest Decision: What Data Should Not Be Migrated?

Legacy environments are full of:

- Redundant data

- Corrupted data

- Compliance-violating data

- Forgotten test databases

- Historical logs with no business value

- Custom tables no one remembers creating

A mature migration strategy involves intentional discarding — not just moving everything blindly.

CTOs must take a bold stance:

“If we don’t know what it is, we don’t migrate it.”

Because the cloud magnifies everything. Including garbage.
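That stance can be expressed as an allowlist rather than a blocklist. In the sketch below, a table is migrated only if a catalog entry names both an owner and a purpose for it, and everything else is quarantined for review. The catalog structure, `TableRecord`, `plan_migration`, and the table names are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class TableRecord:
    """What the organization knows about a legacy table (hypothetical shape)."""
    owner: Optional[str]
    purpose: Optional[str]


def plan_migration(
    discovered: Iterable[str], catalog: Dict[str, TableRecord]
) -> Tuple[List[str], List[str]]:
    """Split discovered tables into (migrate, quarantine) lists."""
    migrate: List[str] = []
    quarantine: List[str] = []
    for table in sorted(discovered):
        record = catalog.get(table)
        # Only tables with a known owner AND a known purpose move forward.
        if record and record.owner and record.purpose:
            migrate.append(table)
        else:
            quarantine.append(table)
    return migrate, quarantine


catalog = {
    "invoices": TableRecord(owner="finance", purpose="billing history"),
    "tmp_import_2011": TableRecord(owner=None, purpose=None),
}
discovered = ["invoices", "tmp_import_2011", "x_custom_tbl"]

migrate, quarantine = plan_migration(discovered, catalog)
print(migrate, quarantine)
```

Quarantine is not deletion. It simply means the burden of proof sits with whoever wants the table moved.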

On Downtime, Expectations, and Organizational Politics

Leadership loves to say: “We need zero downtime.”

That’s admirable. Also unrealistic for many legacy architectures.

The real challenge isn’t technical. It’s political:

- Sales wants 24/7 access.

- Finance demands accuracy.

- Ops needs consistency.

- Compliance requires traceability.

- IT wants sane deployment schedules.

The migration timeline becomes a negotiation. And every department will claim their system is “mission-critical.” The CTO becomes a diplomat holding a grenade.

Cloud Migration Myths CTOs Must Reject

The cloud does not make your data cleaner.

The cloud does not fix broken schema logic.

The cloud does not simplify bad architectural decisions.

The cloud does not erase technical debt — it relocates it.

Migrating legacy data is not a magic trick. It’s a controlled demolition followed by a reconstruction.

The Most Important Migration Principle: Build the Exit Before the Entry

This principle comes from enterprise consulting:

If you can’t extract your data cleanly, you don’t own your system.

Before moving to any cloud vendor, ensure you can:

- Export all data without transformation

- Rebuild schemas independently

- Reconstruct audit logs

- Shift pipelines flexibly

- Restore data without vendor tools

If you can’t reverse the migration, your cloud provider owns you.
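A useful exit drill is to export a table into vendor-neutral artifacts and rebuild it somewhere else using nothing but standard tooling. The sketch below does this with SQLite and CSV from the Python standard library. It is a simplified stand-in: real platforms expose schemas differently, `export_table` and `rebuild_table` are hypothetical helpers, and plain CSV does not preserve NULLs or binary data without extra conventions.

```python
import csv
import io
import sqlite3
from typing import Tuple


def export_table(conn: sqlite3.Connection, table: str) -> Tuple[str, str]:
    """Return (schema DDL, CSV text) for one table, with no vendor tooling."""
    ddl = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?", (table,)
    ).fetchone()[0]
    # `table` is interpolated, so it must come from a trusted inventory.
    cursor = conn.execute(f'SELECT * FROM "{table}"')
    buffer = io.StringIO(newline="")
    writer = csv.writer(buffer)
    writer.writerow([column[0] for column in cursor.description])  # header row
    writer.writerows(cursor)
    return ddl, buffer.getvalue()


def rebuild_table(ddl: str, csv_text: str, table: str) -> sqlite3.Connection:
    """Recreate the table in a fresh database from the exported artifacts."""
    conn = sqlite3.connect(":memory:")
    conn.execute(ddl)
    reader = csv.reader(io.StringIO(csv_text, newline=""))
    header = next(reader)
    placeholders = ", ".join("?" for _ in header)
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', reader)
    return conn


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE shipments (id INTEGER PRIMARY KEY, status TEXT)")
source.executemany("INSERT INTO shipments VALUES (?, ?)", [(1, "delivered"), (2, "in_transit")])

ddl, csv_text = export_table(source, "shipments")
restored = rebuild_table(ddl, csv_text, "shipments")
restored_rows = restored.execute("SELECT * FROM shipments ORDER BY id").fetchall()
print(restored_rows)
```

If a drill like this cannot be completed against your chosen platform before go-live, that is worth knowing while you still have negotiating leverage.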

A Real Example: When Proper Migration Saved a Company Millions

A logistics company preparing for international expansion discovered their decade-old SQL Server instance had quietly become the backbone of their operations. Any downtime would halt shipping, invoicing, and tracking.

Their migration took nine months because:

- Schema conflicts emerged

- Dependencies were undocumented

- Legacy business rules contradicted new workflows

- Data quality required months of cleansing

But once completed, they unlocked:

- Real-time analytics

- Faster API-driven integrations

- Automated forecasting

- Streamlined compliance

- Dramatic reduction in maintenance costs

And, perhaps most importantly, they reclaimed architectural control.

A Final Thought

Legacy migration isn’t about technology. It’s about preparing your company for the next ten years of growth, automation, and innovation. Old systems become invisible anchors. New cloud infrastructure becomes scaffolding for speed and scale. But only if the migration is intentional.

So here’s the question every CTO should confront before beginning:

Are you migrating data — or migrating the assumptions that have been quietly limiting your architecture for years?